Who Needs Stock Sound? Adobe Firefly Now Generates Audio Effects on Command

With just a prompt or your voice, creators can now use Firefly to produce AI sound effects built for speed, story, and commercial use.

Adobe is turning concept into creation: After teasing its experimental sound effects tool, Project Super Sonic, last October, the company is now bringing it to life with a launch inside its Firefly app. Available in beta, the Generate Sound Effects feature does what it says: it quickly creates custom sound effects, atmospheric background noise, and audio associated with video content, using either a text prompt or voice input.

But that’s not the only Firefly announcement. A new capability called Text to Avatar lets creators generate a virtual presenter for demo reels, presentation videos, or any other purpose. It, too, is available in beta.

The company is also expanding its partner ecosystem with new models, including Google’s Veo 3 (with audio), Moonvalley’s Marey, and Runway’s Gen-4 video. Lastly, it’s adding new video controls that give creators greater flexibility in shaping composition, pacing, and style.

Democratizing Sound Effect Creation for All

AI has already empowered creators to produce entire works of art, from imagined photographs to convincing short films. Now, Adobe is extending that power to sound, introducing AI-generated sound effects to enhance the creative process. Specifically, the feature produces two types of audio: impact sounds, which capture a moment’s tone and dramatic weight; and atmospheric tracks, such as traffic, nature sounds, and other ambience, which provide location context.

Some creators struggle to find the right audio effect for their video, and scouring Getty Images, Epidemic Sound, Artlist, and iStock can be time-consuming. Filmmakers also often end up shaping their work around the stock sound effects available, rather than the other way around. Adobe’s sound effects generator promises to produce audio tailored to each scene.

Adobe isn’t the first to offer AI-generated sound effects. Companies such as ElevenLabs, Soundful, and AIVA have been in the market for some time. However, Adobe believes its advantage lies in its pitch to creators, studios, and brands: like the images and videos Firefly creates, the sound effects will be “commercially safe” to use. More specifically, Adobe claims the generated assets won’t infringe on anyone’s intellectual property, including copyrights and trademarks.

What’s changed with the technology since it was introduced as a so-called “sneak” at Adobe Max 2024? “After the reception we got from Super Sonic, the team is always doing this quality analysis, customer engagement, data set updates on the high-quality training data side, to always try to take the model and make it not only better understanding the problems, but generating higher-quality sound effects,” Alexandru Costin, Adobe’s vice president of generative AI, says in a briefing. From there, the team needed to make it production-ready. That meant ensuring generations are fast, “faster than real-time,” and adding a small non-linear editor (NLE) made explicitly for this workflow.

Costin says sound effect generation is currently limited to desktop, but Adobe plans to bring it to mobile devices. First, the company needs to refine the user experience for smaller screens.

During a brief demonstration, Adobe’s Senior Professional Video Evangelist, Shawn McDaniel, showed a short video clip he had generated using Firefly. The clip had no sound, so he used a simple text prompt to create several effect options. After sampling each one, he chose the best fit to add ambient sound to the footage.

In another example, he wanted to insert the sound of a zipper closing, but instead of generating the effect via text, McDaniel opted to use his voice. After pressing the microphone button, he imitated the sound of a zipper; Firefly then processed the recording, including his inflection, and produced four variations.

Creators can add up to five sound effects to a video, and the effects can also be downloaded. In addition, the audio clips can be shared with Adobe’s other professional desktop tools.

Create Your Bespoke AI Avatar

Creating a video but don’t have the budget to hire talent? Don’t want to hire a professional but still want a polished presentation? Adobe offers a solution called Text to Avatar. With the tool, now available in beta through Firefly, creators can choose an avatar style as a stand-in, insert a background, and provide a custom script to instantly create a bespoke presenter.

It’s nowhere near a digital twin: the avatar only recites the text it’s given, so don’t expect it to handle a Q&A afterward. Still, the tool should be useful for anyone who wants to create real estate videos, marketing assets, demo reels, and more without incurring thousands of dollars in production costs.

Again, Adobe isn’t the first to the text-to-avatar space; its main selling point is likely its approach of using licensed work. Another difference is that Adobe positions this technology for bespoke work, whereas tools from HeyGen, Character.ai, Synthesia, Veed, and others are better suited to organizations and creators who want avatars that act as their digital twins.

New Video Creation Tools

In addition to sound effect generation, Adobe is introducing several new video controls to Firefly. These capabilities are designed to give creators “more frame-by-frame precision” over their work.

The first is composition referencing, which guides framing and layout based on a reference still or visual sample. Next is keyframe cropping, which lets creators refine composition frame by frame; Adobe says it is suited to social and cinematic formats. Finally, style presets apply a consistent visual look throughout the video.

New Partner Models Added to Firefly

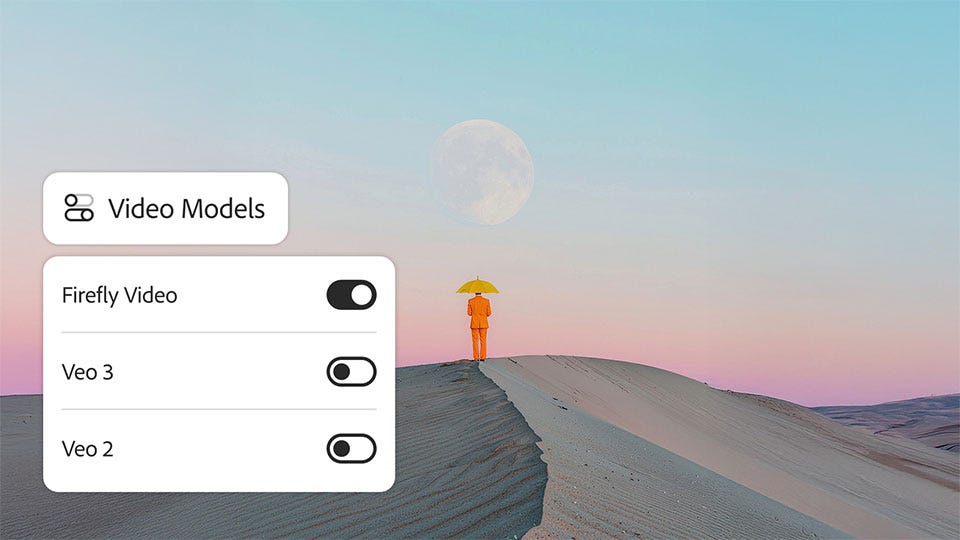

The last Firefly announcement involves the models the app supports. OpenAI, Google (Imagen 3 and Veo 2), and Black Forest Labs (Flux 1.1) were the first to bring their models to Firefly, followed quickly by models from Ideogram (3.0), Pika, Luma (Ray2), Google (Imagen 4 and Veo 3), and Runway (Gen-4 Image). Today, Costin reveals that Firefly is adding Moonvalley’s Marey, Google’s Veo 3 (with audio), Pika 2.2, Luma AI’s Ray2, and Topaz Labs’ image and video upscalers.

However, not everything will be immediately available:

Google’s Veo 3 with audio in text to video is now in the Firefly app

Runway’s Gen-4 video can be accessed in Firefly Boards

Moonvalley’s Marey and Topaz Labs’ image and video upscalers will both be coming to Firefly Boards

Google’s Veo 3 with audio in image to video, Pika 2.2, and Luma AI’s Ray2 will all soon be in the Firefly app

Costin reiterates Adobe’s policy that all work produced using these partner models adheres to the same standards as Adobe’s core models. Content Credentials will be embedded into all generated content, and all third-party model makers have agreed not to train on the prompts used or the assets created through Adobe services.