Ai2 Bets on MolmoAct to Help Robots Navigate the Real World

Ai2’s newest model teaches robots to “think” in three dimensions, plan their moves, and act with greater precision and transparency.

Ai2, or the Allen Institute for AI, is unveiling a new class of models designed to help robots move through the world with greater spatial awareness. Called Action Reasoning Models (ARMs), the class is billed as giving machines a sense of space that text-based inputs alone can't deliver. The first in the ARM family is MolmoAct, an open-source, generalized model built atop Ai2's Molmo vision-language model. It has seven billion parameters and is trained on around 12,000 "robot episodes" from real-world environments.

With its approach to vision-language models (VLMs), Ai2 aims to fast-track Embodied AI, the integration of AI into physical systems. Asked what sets MolmoAct apart, the institute highlights several key advantages: it provides much-needed transparency, it is genuinely open source, and it requires significantly less data than current offerings.

“Embodied AI needs a new foundation that prioritizes reasoning, transparency, and openness,” Ali Farhadi, Ai2’s chief executive, says in a statement. “With MolmoAct, we’re not just releasing a model; we’re laying the groundwork for a new era of AI, bringing the intelligence of powerful AI models into the physical world. It’s a step toward AI that can reason and navigate the world in ways that are more aligned with how humans do—and collaborate with us safely and effectively.”

Moving From Perception to Action

MolmoAct's promise is that it converts two-dimensional images into three-dimensional spatial plans. Machines can use this information to better reason about space, movement, and time and to plan their next steps, both literally and figuratively.

Although it's a type of vision-language model, Ai2 describes it more as a vision-language-action (VLA) model. According to Ranjay Krishna, the institute's computer vision research lead, MolmoAct takes "an input image (so a scene that's in front of it) and some command ('put the plate in the dishwasher') in language, and it outputs torque or changes in the robotic arm, and those changes get directly translated into movement in your robot."

But that's not all: it uses Chain of Thought (CoT) reasoning, which has been hard to apply in robotics because motion is difficult to describe in language. In practice, when the model is instructed to pick something up, it first converts the scene into a 3D representation and then draws a line tracing how the robot will move through it. It won't execute any actions until it has worked out its plan for the space.

“This allows you to do a lot of interesting things,” Krishna explains. “First, it allows you to convert this very ambiguous statement…into something that’s very grounded and precise. The robot now knows how it’s going to move its arms in space. You know how the robot is going to move before it even moves. And so, you could potentially intervene and stop an execution if you think it’s going to do something you don’t want it to do. It also allows you to steer it, so you can now describe things, not just in language, but in this space itself.”

MolmoAct's reasoning proceeds in three stages. First, the model outputs spatially grounded perception tokens. These are special tokens learned in advance with an autoencoder (a type of neural network architecture) that let the model estimate distances between objects and fold that information into its reasoning. Next, the model predicts a sequence of image-space waypoints that serve as intermediate goals, giving a visual outline of how the task should be completed. Finally, MolmoAct generates the robot's actions based on those waypoints.
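The three stages can be illustrated with a toy Python sketch. Everything in it is hypothetical and is not MolmoAct's code or API: stage 1 stands in for the autoencoder with a nearest-entry lookup in a fixed depth codebook, stage 2 uses straight-line waypoints where MolmoAct would predict them, and stage 3 turns each waypoint into a 2D displacement command rather than real arm torques. The point is only the ordering: perceive, plan waypoints, then derive actions from those waypoints.

```python
from dataclasses import dataclass
from typing import List, Tuple

# Hypothetical types for illustration only; not MolmoAct's real interfaces.
Pixel = Tuple[float, float]
Action = Tuple[float, float]


@dataclass
class Plan:
    perception_tokens: List[int]  # stage 1: discrete depth tokens
    waypoints: List[Pixel]        # stage 2: image-space intermediate goals
    actions: List[Action]         # stage 3: per-step displacement commands


def perception_tokens(depths: List[float], codebook: List[float]) -> List[int]:
    """Stage 1 (toy): map each depth reading to the index of the nearest
    codebook entry, a scalar stand-in for a learned autoencoder codebook."""
    return [min(range(len(codebook)), key=lambda i: abs(codebook[i] - d)) for d in depths]


def waypoints(start: Pixel, goal: Pixel, steps: int) -> List[Pixel]:
    """Stage 2 (toy): evenly spaced straight-line waypoints from start to goal."""
    return [
        (start[0] + (goal[0] - start[0]) * k / steps,
         start[1] + (goal[1] - start[1]) * k / steps)
        for k in range(1, steps + 1)
    ]


def actions(start: Pixel, path: List[Pixel]) -> List[Action]:
    """Stage 3 (toy): each action is the displacement to the next waypoint."""
    out: List[Action] = []
    prev = start
    for p in path:
        out.append((p[0] - prev[0], p[1] - prev[1]))
        prev = p
    return out


def reason_then_act(depths: List[float], codebook: List[float],
                    start: Pixel, goal: Pixel, steps: int = 4) -> Plan:
    """Build the full plan before any action would be executed."""
    tokens = perception_tokens(depths, codebook)
    path = waypoints(start, goal, steps)
    return Plan(tokens, path, actions(start, path))


if __name__ == "__main__":
    plan = reason_then_act([0.42, 0.9], [0.25, 0.5, 1.0], (0.0, 0.0), (40.0, 20.0))
    print(plan)
```

Because the whole `Plan` exists before any action runs, a caller could inspect the waypoints and decline to execute them, which is the kind of intervention Krishna describes.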

Ai2 argues that many of the models currently used in robotics have problems. "If you dig deeper, you quickly realize that these models, all of these applications, are very narrow, they're very fragile, and they're very fragmented," Krishna states. He points out that existing models work well on the one job they're programmed to do but can't adjust if something in the scene changes. They also lack transparency, which prevents developers and programmers from understanding why something isn't working. Krishna calls out Google's Gemini, Nvidia's GR00T, and Figure AI's Helix as examples of models that operate in "big black boxes or blue boxes."

“That’s really where MolmoAct comes in. It’s our move to move our rigorous, scientific approach into the physical world,” Krishna contends. “And…just like Molmo, MolmoAct is completely open, meaning we’re going to release all the weights, all the data, all the code, and all the evaluations.”

How MolmoAct Does More With Less Data

Data efficiency is also touted as a key advantage of using this new robotic model. “Unlike Nvidia [and Google] Gemini, which has a lot more data and a lot more resources than we do, by allowing our model to ground its actions and reason through that in 3D space, we need a lot less data to actually make these models more performant, and so we’re currently matching or even outperforming a lot of these top models from Nvidia and other places, all with much, much less data,” Krishna proclaims.

Using table cleaning as an example, he describes how most robotic models learn by taking an action, reassessing, and then potentially changing direction, a process that tends to produce "jittery behaviors." MolmoAct, by contrast, converts an ambiguous language command into a plan. By drawing a specific trajectory and having every action follow it, the model needs less data, he claims. Each action is directed toward one end goal rather than constantly readjusting.

“You can think about this in terms of if you make a decision about how to move your body, and then I replace your brain with someone else’s brain, and then they make a decision about how to move your body next, and then replace that again with a new person,” Krishna explains. “That’s how we’ve been training a lot of VLAs. And now you can think about this as being one consistent reasoning process that decides this is the cup I want to pick up. And so every action is towards that specific action through that end goal.”

Underscoring this point, Ai2 says pre-training MolmoAct-7B on 26.3 million samples took about a day on a cluster of 256 Nvidia H100 GPUs, and fine-tuning on 64 H100s took around two hours. By comparison, Nvidia's GR00T-N1-2B model was trained on 600 million samples using 1,024 H100s.
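The reported figures can be compared with a few lines of arithmetic. This is a back-of-the-envelope sketch using only the numbers quoted above plus one assumption: "a day" of pre-training is taken as 24 wall-clock hours. The article gives no training time for GR00T-N1-2B, so its GPU-hours can't be computed here, and sample counts alone don't account for differences in model size or in what each sample contains.

```python
# Figures as reported in the article.
MOLMOACT_PRETRAIN_SAMPLES = 26.3e6
MOLMOACT_PRETRAIN_GPUS = 256
MOLMOACT_PRETRAIN_HOURS = 24   # assumption: "a day" read as 24 wall-clock hours
MOLMOACT_FINETUNE_GPUS = 64
MOLMOACT_FINETUNE_HOURS = 2    # "around two hours"

GROOT_SAMPLES = 600e6
GROOT_GPUS = 1024


def compare() -> dict:
    """Ratios and GPU-hours derived from the reported figures."""
    return {
        # How many times more samples GR00T-N1-2B used
        "sample_ratio": GROOT_SAMPLES / MOLMOACT_PRETRAIN_SAMPLES,
        # MolmoAct's sample count as a fraction of GR00T's
        "sample_fraction": MOLMOACT_PRETRAIN_SAMPLES / GROOT_SAMPLES,
        # How many times more GPUs GR00T-N1-2B used
        "gpu_ratio": GROOT_GPUS / MOLMOACT_PRETRAIN_GPUS,
        # GPU-hours = GPUs x wall-clock hours
        "pretrain_gpu_hours": MOLMOACT_PRETRAIN_GPUS * MOLMOACT_PRETRAIN_HOURS,
        "finetune_gpu_hours": MOLMOACT_FINETUNE_GPUS * MOLMOACT_FINETUNE_HOURS,
    }


if __name__ == "__main__":
    for name, value in compare().items():
        print(f"{name}: {value:,.3f}")
```

On these numbers, GR00T-N1-2B used roughly 23 times as many samples and four times as many GPUs, while MolmoAct's pre-training works out to about 6,144 H100-hours under the 24-hour assumption.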

How Does MolmoAct Perform?

So how does Ai2's model measure up against its rivals? Before getting into the evaluations, Jiafei Duan, another Ai2 researcher, cautions that benchmarking in robotics is far more challenging than benchmarking multimodal or language models. Reproducing results can be highly time-consuming and requires identical hardware and setups, resources usually available only to the original model's creator. For that reason, Ai2 concentrated on simulation benchmarks.

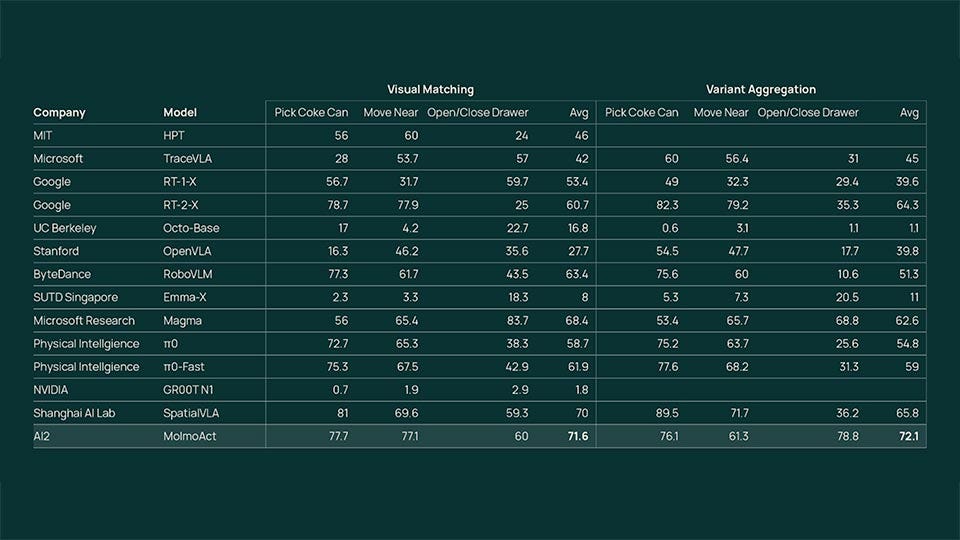

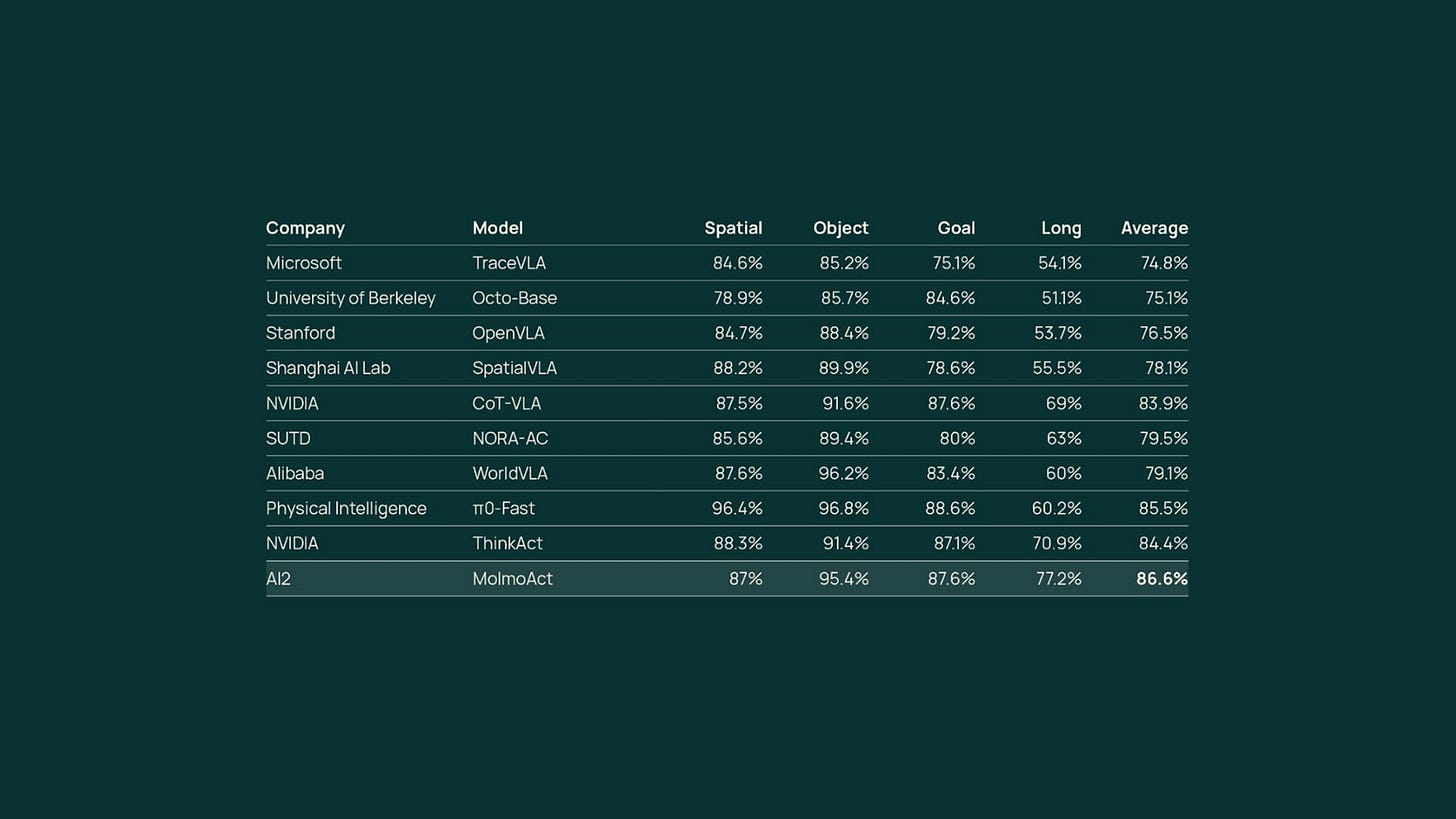

To assess its pre-training capabilities, MolmoAct was evaluated with SimplerEnv, where it reportedly achieved an out-of-distribution task success rate of 72.1 percent, besting models from Physical Intelligence, Google, Microsoft, and Nvidia. On LIBERO, a simulation benchmark for knowledge transfer in multitask and lifelong robot learning, Ai2 claims MolmoAct outperforms models from Microsoft, Physical Intelligence, Nvidia, Stanford, Alibaba, and UC Berkeley.

The New Robotic Frontier: Your Home

While much of the robotic AI industry focuses on industrial or enterprise applications, Ai2 is concentrating on the home, training MolmoAct on tasks in the kitchen, bedroom, bathroom, and living room. The home is unstructured and unpredictable compared to factories and warehouses, making it an ideal testing ground for developing adaptive, general robotic intelligence.

Ai2's decision to collect its own data is deliberate. Jason Lee, a researcher on MolmoAct, says that the largest open-source dataset available to roboticists, from the Open X-Embodiment project, falls short of the team's requirements. He explains that it is "still a raw robot action data" and doesn't incorporate any reasoning. "They just take in an input observation as well as language instruction, and then train a robot to output this robot action command."

Krishna notes that some of MolmoAct’s abilities could translate from household to industrial settings, but the transfer may not be seamless. A home environment is very different from the tightly packed containers of a warehouse, requiring additional data gathering to handle those tasks effectively.

“We want these robots to be able to navigate in unstructured environments, places that they’ve never seen before. We want them to be able to pick up and use objects that they’ve never seen before, and we want them to have this sort of world knowledge, to be able to do all of that, to be able to plan and do completely new tasks that they’ve never been asked to do, and to be able to get there. These are the kinds of similarities that they’re going to share with household tasks,” he shares. “So it’s a good proxy to evaluate. Of course, we don’t expect these robots and these models to be used directly in household environments. Today, we’re really making a bet for something that’s multiple years down the line, and this is our first version of the model for that future.”

Even at 7 billion parameters, the first-generation MolmoAct isn't small enough to run locally on the robots themselves. During brief demonstrations, there were short delays between robot actions. Krishna says these pauses are artificially set intervals in which the robot acts, decides, and then replans. So if you want a robot to clean up all those LEGOs scattered around your living room floor, don't expect it to be a quick process. "For right now, we are focusing [on] the tasks that take a longer time, and you just want to leave the robot there overnight," Duan explains.

But speeding up a robot's response is less a research problem than an engineering one, Krishna interjects. His team aims to help robots understand the world, determine the best actions to take, and execute them with precision. "So things like distilling into a smaller model [and] getting things to run a lot faster—those are things we're doing, but they're not limiting the sort of capabilities of this model."

As with most of its inventions, Ai2 expects MolmoAct to serve as a foundation for researchers, whether to refine their existing robotic models or train them on new tasks and capabilities. The institute wouldn’t be surprised if the technology found its way into commercial products—when asked if Roombas could clean rooms better if they were powered by MolmoAct, for example, Krishna didn’t push back.

Ai2 is already holding discussions with search-and-rescue groups with the goal of better understanding “unstructured sort of wreckages.” And it’s feasible that MolmoAct could help the robots used in space exploration missions.

Krishna stresses that Ai2 isn't currently targeting factory or manufacturing work, a space already crowded with companies backed by huge investments. Rather than competing there, his team is tuning out that noise and is "focused on the research of getting to the next horizon."

In Pursuit of the ‘Robot Brain’

For many, the promise of robotics is a machine in the home tackling the mundane chores we don't want to do. The internet is filled with creative demo videos hyping this dream. But Duan thinks that reality is still far off, and that what we're seeing in those videos is the result of teleoperation.

“I think we are missing the idea of a robot brain,” he opines. “Industry robots have already been around for a period of time, and that drives the whole industry revolution and progress in manufacturing. But a lot of these industry robots are specialized agents. They are programmed to do a really specific task. They are not able to adapt or be refined for any downstream task that you want to do beyond the task that it was designed for. So a robot brain is an idea whereby you have a model that’s able to generalize in new environments, manipulate new objects, do new tasks, and also to have new behaviors. The problem is that we are not there yet.”

Is the quest to develop the "robot brain" akin to artificial general intelligence (AGI)? Duan acknowledges the comparison, calling it the "next frontier" and adding that people are already moving in that direction. He predicts that Embodied AI will be the talk of the town in 2026, thanks to an explosion of data that can be used to build such brains into machines.

“We’ve started seeing signs of this already,” Krishna adds. “There are so many startups that are forming around robotics now. There are also a ton of corporations that are investing in R&D labs for robotics…We’re also seeing a lot of hardware companies come out. A lot more robot form factors now exist than they used to, even a year ago. So it does seem like a lot of people are betting on it.”

MolmoAct isn't Ai2's first robotics project. The institute has previously released tools such as THOR, an environment that generates data from synthetic worlds that can be used to train robotic policies. Ai2 plans to expand THOR and expects it will be used to train the next generation of MolmoAct.

You can find the MolmoAct model, its artifacts, and the technical report on Ai2’s GitHub and Hugging Face repositories.